MattGPT: Local Agentic AI Research Platform

Free and open-source research automation powered by local AI models

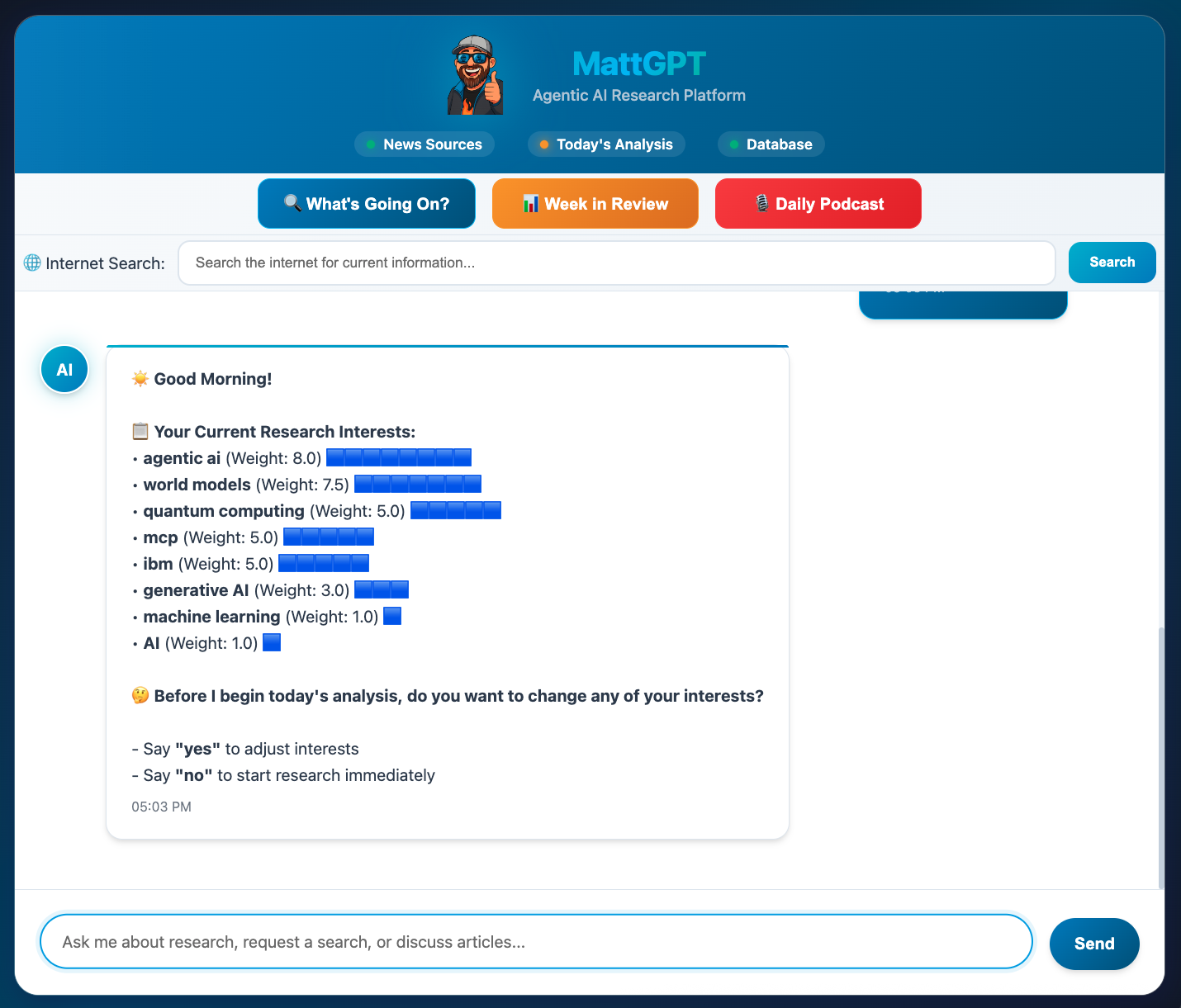

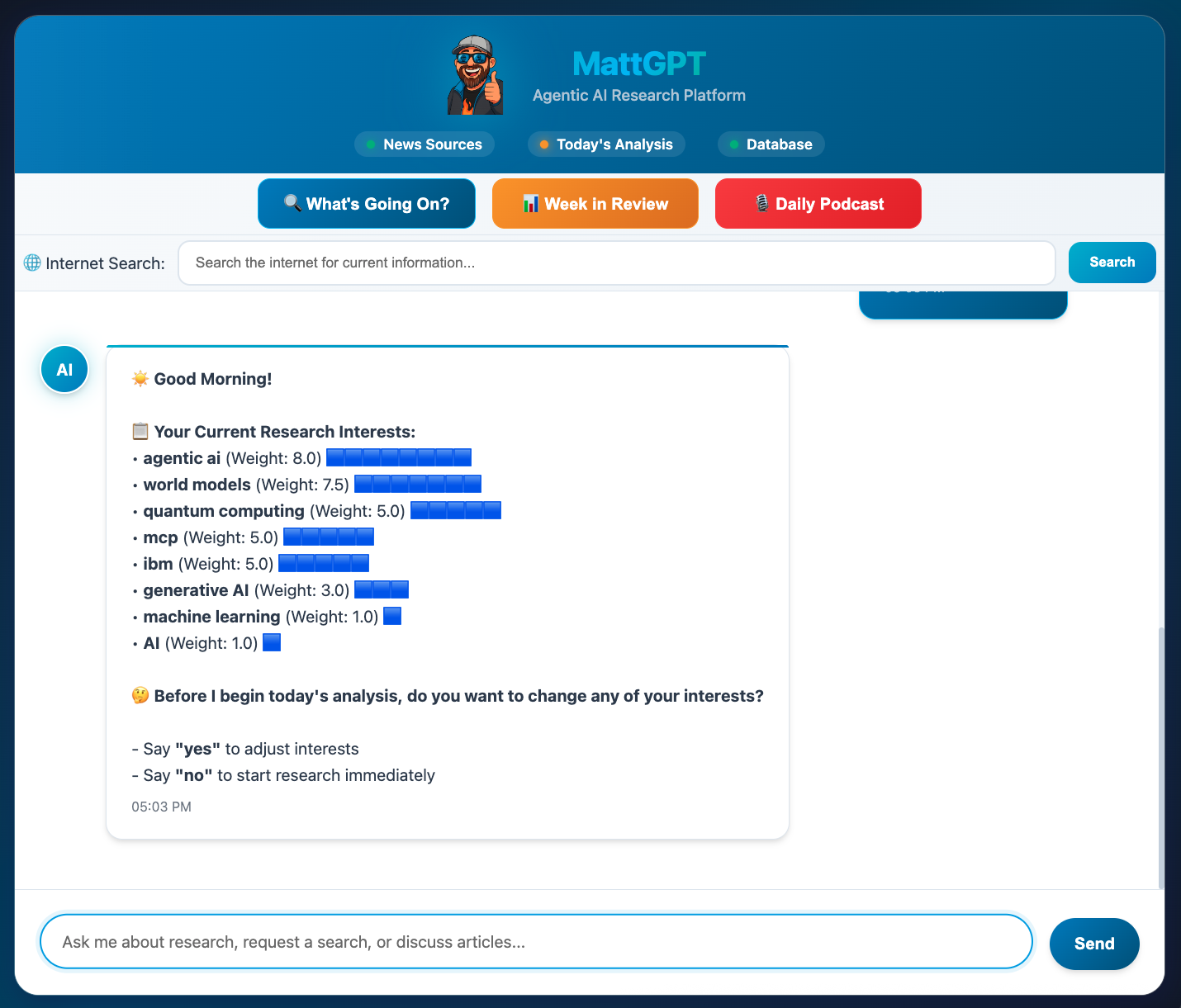

Agentic AI Research Platform

Your intelligent research companion, powered by IBM's Granite 3.2 LLM. Experience a Two-Track Architecture that combines chain operations for complex workflows with enhanced chat for conversational interactions, all running locally on your machine.
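Because everything runs locally, each model call goes to a local Ollama server rather than a cloud API. The sketch below shows roughly what such a call might look like. It assumes Ollama is listening on its default port 11434 and uses Ollama's `/api/generate` endpoint with the `granite3.2:8b` model from the setup steps; `build_request` and `generate` are hypothetical helper names, not MattGPT's own code.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint; MattGPT may be configured differently.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "granite3.2:8b"  # the model pulled during setup


def build_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server (hypothetical helper)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str) -> str:
    """Send the prompt to Ollama and return the generated text (requires Ollama to be running)."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns one JSON object whose "response" field holds the text.
    return body["response"]
```

With `ollama serve` running and the model pulled, `generate("Summarize today's top technology headline.")` returns the completion text. Disabling streaming keeps the sketch simple: Ollama replies with one JSON object instead of a sequence of partial chunks.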


Discover our AI-generated podcasts, which use Edge-TTS dual-voice synthesis for two hosts, the Alex and Sam personas, who discuss trending technology and business topics drawn from current-events analysis.
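Dual-voice synthesis needs the podcast script split into per-speaker turns so each turn can be voiced separately. A minimal sketch, assuming the LLM writes the script as `Alex: ...` / `Sam: ...` lines; the voice names in `VOICES` are hypothetical choices of real Edge neural voices, not necessarily the ones MattGPT uses.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical persona-to-voice mapping; MattGPT's actual voice choices may differ.
VOICES = {
    "Alex": "en-US-GuyNeural",
    "Sam": "en-US-JennyNeural",
}

# Matches turn lines such as "Alex: Welcome back to the show."
TURN_PATTERN = re.compile(r"^\s*(Alex|Sam)\s*:\s*(.+?)\s*$")


@dataclass
class Segment:
    speaker: str
    voice: str
    text: str


def split_dialogue(script: str) -> List[Segment]:
    """Split a two-host script into per-speaker segments, merging continuation lines."""
    segments: List[Segment] = []
    for line in script.splitlines():
        match = TURN_PATTERN.match(line)
        if match:
            speaker, text = match.groups()
            segments.append(Segment(speaker, VOICES[speaker], text))
        elif line.strip() and segments:
            # A non-empty line without a speaker tag continues the previous turn.
            segments[-1].text += " " + line.strip()
    return segments
```

Each segment could then be rendered with the `edge-tts` package, for example `await edge_tts.Communicate(seg.text, seg.voice).save(path)`, and the resulting clips concatenated in order to produce the episode.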

Explore comprehensive weekly analyses built from 7 days of current-events data, featuring LLM-powered keyword consolidation, trend analysis, and interactive D3.js word cloud visualizations.
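The weekly analysis boils down to aggregating keywords over a 7-day window and sizing each term by frequency for the word cloud. A minimal sketch, assuming each day's keywords are already extracted into a list; the `aliases` map is a hypothetical stand-in for the LLM-powered consolidation step, and the `{text, count, size}` entries are an assumed format for the D3.js front end, not MattGPT's actual schema.

```python
from collections import Counter
from datetime import date, timedelta
from typing import Dict, List, Optional


def weekly_word_cloud(
    daily_keywords: Dict[date, List[str]],
    end: date,
    aliases: Optional[Dict[str, str]] = None,
    min_size: int = 12,
    max_size: int = 60,
) -> List[dict]:
    """Aggregate a 7-day window of keywords into word-cloud entries sized by frequency."""
    aliases = aliases or {}  # keys are expected in lowercase
    start = end - timedelta(days=6)  # inclusive window: `end` plus the 6 days before it
    counts = Counter()
    for day, keywords in daily_keywords.items():
        if start <= day <= end:
            for keyword in keywords:
                key = keyword.strip().lower()
                # Hypothetical stand-in for LLM consolidation: fold known synonyms together.
                counts[aliases.get(key, key)] += 1
    if not counts:
        return []
    top = max(counts.values())
    # Scale linearly so the most frequent term gets max_size.
    return [
        {"text": word, "count": n, "size": min_size + (max_size - min_size) * n // top}
        for word, n in counts.most_common()
    ]
```

Linear scaling keeps the sketch easy to follow; a real visualization might prefer square-root or log scaling so a single dominant term does not shrink everything else.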

Complete documentation to help you get started with MattGPT's features and customization options.

⚠️ Advanced Setup Required: MattGPT is a local AI research platform that runs entirely on your computer.

1. Pull the model: `ollama pull granite3.2:8b` (IBM's Granite 3.2)
2. Create a virtual environment: `python3.12 -m venv .venv`
3. Activate it: `source .venv/bin/activate` (Mac/Linux) or `.venv\Scripts\activate` (Windows)
4. Install dependencies: `pip install -r requirements.txt`
5. Edit `sources_config.json` to customize RSS feeds and search providers
6. Launch MattGPT: `./MattGPT.sh` or `python app.py`
7. Open http://localhost:5000 in your browser

MattGPT demonstrates that sophisticated AI research workflows can run entirely locally, with no cloud dependencies. Use AI assistants such as Claude or ChatGPT to help troubleshoot and customize the system while keeping full control over your data and processing.
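The setup steps include editing `sources_config.json`, and a typo there is easier to catch before the app starts. A minimal validation sketch follows; the `rss_feeds` and `search_providers` keys and the per-feed `name`/`url` fields are a hypothetical schema, so check the shipped file for the real structure.

```python
import json
from pathlib import Path

# Hypothetical schema; MattGPT's real sources_config.json may use different keys.
REQUIRED_KEYS = ("rss_feeds", "search_providers")


def parse_sources(text: str) -> dict:
    """Parse a sources config from JSON text and check the assumed structure."""
    config = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise ValueError(f"sources config is missing keys: {', '.join(missing)}")
    for feed in config["rss_feeds"]:
        url = str(feed.get("url", ""))
        if not url.startswith(("http://", "https://")):
            raise ValueError(f"feed {feed.get('name', '?')!r} needs an http(s) URL")
    return config


def load_sources(path: str = "sources_config.json") -> dict:
    """Read the config file from disk and validate it before the app starts."""
    return parse_sources(Path(path).read_text(encoding="utf-8"))
```

Keeping parsing separate from file I/O makes the validation easy to exercise on a string before touching the real file.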

MattGPT implements a Two-Track Architecture that supports both complex automated workflows (chain operations) and conversational interactions (enhanced chat), all powered by local AI models.
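One way to picture the two tracks: a chain feeds each step's output into the next prompt, while chat keeps a running history so every reply sees earlier turns. The sketch below is an illustration under assumptions, not MattGPT's implementation: `run_chain`, `ChatSession`, `route`, and the `track` request field are hypothetical, and the model is any prompt-to-text callable (for example a function that calls the local Ollama server).

```python
from dataclasses import dataclass, field
from typing import Callable, List

LLM = Callable[[str], str]  # any function mapping a prompt to model text


def run_chain(steps: List[str], topic: str, llm: LLM) -> str:
    """Chain track: each step's output becomes the {input} of the next prompt template."""
    result = topic
    for template in steps:
        result = llm(template.format(input=result))
    return result


@dataclass
class ChatSession:
    """Chat track: keep conversation history so each reply sees prior turns."""
    llm: LLM
    history: List[str] = field(default_factory=list)

    def send(self, message: str) -> str:
        self.history.append(f"User: {message}")
        reply = self.llm("\n".join(self.history) + "\nAssistant:")
        self.history.append(f"Assistant: {reply}")
        return reply


def route(request: dict, llm: LLM, session: ChatSession) -> str:
    """Dispatch to the chain or chat track based on a hypothetical 'track' field."""
    if request.get("track") == "chain":
        return run_chain(request["steps"], request["topic"], llm)
    return session.send(request["message"])
```

Injecting the model as a plain callable keeps both tracks independent of how the local model is served, which also makes them easy to test with a stub.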

Explore comprehensive technical documentation including system architecture diagrams, API endpoints, database schemas, and implementation details for every component.

📖 View Full Architecture Documentation